Abstract

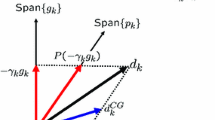

The conjugate gradient method is particularly useful for minimizing functions of very many variables because it does not require the storage of any matrices. However, the rate of convergence of the algorithm is only linear unless the iterative procedure is “restarted” occasionally. At present it is usual to restart every n or (n + 1) iterations, where n is the number of variables, but it is known that the frequency of restarts should depend on the objective function. Therefore the main purpose of this paper is to provide an algorithm with a restart procedure that takes account of the objective function automatically. Another purpose is to study a multiplying factor that occurs in the definition of the search direction of each iteration. Various expressions for this factor have been proposed, and often it does not matter which one is used. However, some reasons are now given in favour of one of these expressions. Several numerical examples are reported in support of the conclusions of this paper.
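To make the terms used in the abstract concrete, the following Python sketch shows a generic nonlinear conjugate gradient loop with the conventional fixed restart every n iterations. It is not the automatic restart procedure that the paper proposes, which the abstract does not specify. The two formulas for the multiplying factor (called `beta` here) are the widely used Fletcher-Reeves and Polak-Ribière expressions, included on the assumption that they are among the "various expressions" the abstract mentions. The backtracking line search, the descent safeguard, the example objective, and all function names are illustrative choices.

```python
from typing import Callable, List, Optional, Tuple

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def beta_fletcher_reeves(g_new: Vector, g_old: Vector) -> float:
    # Fletcher-Reeves factor: |g_new|^2 / |g_old|^2
    return dot(g_new, g_new) / dot(g_old, g_old)


def beta_polak_ribiere(g_new: Vector, g_old: Vector) -> float:
    # Polak-Ribiere factor: g_new . (g_new - g_old) / |g_old|^2
    diff = [a - b for a, b in zip(g_new, g_old)]
    return dot(g_new, diff) / dot(g_old, g_old)


def backtracking_step(f, x: Vector, d: Vector, g: Vector,
                      shrink: float = 0.5, c1: float = 0.1) -> float:
    # Halve the step until the sufficient-decrease (Armijo) test holds.
    # This is an assumed, simple line search, not one taken from the paper.
    fx, slope, step = f(x), dot(g, d), 1.0
    while step > 1e-16:
        trial = [xi + step * di for xi, di in zip(x, d)]
        if f(trial) <= fx + c1 * step * slope:
            break
        step *= shrink
    return step


def conjugate_gradient(f: Callable[[Vector], float],
                       grad: Callable[[Vector], Vector],
                       x0: Vector,
                       beta_rule: Callable[[Vector, Vector], float],
                       restart_every: Optional[int] = None,
                       tol: float = 1e-8,
                       max_iter: int = 10000) -> Tuple[Vector, int]:
    x = list(x0)
    n = len(x)
    restart_every = restart_every or n  # the "usual" choice: every n iterations
    g = grad(x)
    d = [-gi for gi in g]               # first direction is steepest descent
    since_restart = 0
    for k in range(max_iter):
        if dot(g, g) ** 0.5 < tol:
            return x, k
        step = backtracking_step(f, x, d, g)
        x = [xi + step * di for xi, di in zip(x, d)]
        g_new = grad(x)
        since_restart += 1
        if since_restart >= restart_every:
            d = [-gi for gi in g_new]   # periodic restart
            since_restart = 0
        else:
            beta = beta_rule(g_new, g)  # the multiplying factor
            d = [-gi + beta * di for gi, di in zip(g_new, d)]
            if dot(d, g_new) >= 0.0:    # safeguard: fall back if not downhill
                d = [-gi for gi in g_new]
                since_restart = 0
        g = g_new
    return x, max_iter


def example_f(x: Vector) -> float:
    # Hypothetical convex test function with minimizer (1, -2).
    return (x[0] - 1.0) ** 2 + 10.0 * (x[1] + 2.0) ** 2 + (x[0] - 1.0) ** 4


def example_grad(x: Vector) -> Vector:
    return [2.0 * (x[0] - 1.0) + 4.0 * (x[0] - 1.0) ** 3, 20.0 * (x[1] + 2.0)]


if __name__ == "__main__":
    for rule in (beta_fletcher_reeves, beta_polak_ribiere):
        solution, iterations = conjugate_gradient(
            example_f, example_grad, [3.0, 1.0], rule)
        print(rule.__name__, solution, iterations)
```

The loop keeps only a handful of vectors of length n (the point, two gradients, and the search direction), so its storage grows linearly with the number of variables. This is the property the abstract highlights when it says no matrices need to be stored.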

Cite this article

Powell, M.J.D. Restart procedures for the conjugate gradient method. Mathematical Programming 12, 241–254 (1977). https://doi.org/10.1007/BF01593790


DOI: https://doi.org/10.1007/BF01593790